What do participants think of accounting experiments? A preliminary look into ongoing research

Relative to other academic disciplines, the field of accounting has one of the most diverse collections of experimental research, ranging from scenario-based experiments, whose features draw from the judgment and decision-making school of thought (Bonner 2007), to econ-based experiments, whose features are derived from induced value theory and the principle of incentive compatibility (Smith 1976; Friedman, Friedman, and Sunder 1994). Although accounting experiments can vary substantially in the experimental features they use, most of them strive to test their pre-selected theories as meticulously as possible (Bloomfield, Nelson, and Soltes 2016). However, meticulousness implies more than pursuing strong internal validity. It typically also means recognizing other quality criteria, such as high participant motivation, strong participant engagement (i.e., experimental realism), and, according to some, perceived similarity to practice (i.e., mundane realism).

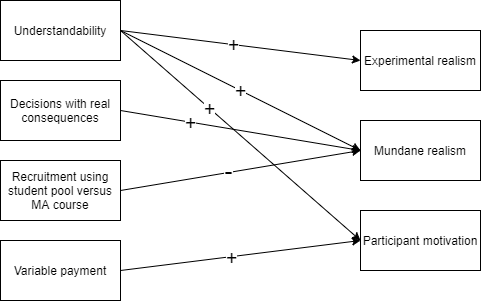

Even if we agree that some or all of these three quality criteria are worth upholding, the question remains which features of accounting experiments contribute the most to them. Ask any experimental researcher in the field of accounting, and you will undoubtedly receive an informed and thoughtful answer. In most cases, however, they will favor some features (and quality criteria) over others, and their answer will depend on whether they were trained in scenario-based or econ-based experiments. If you are lucky, you may find a few experimental researchers who are more eclectic. In any case, it will be challenging to answer our question: which features of accounting experiments contribute the most to participant motivation, participant engagement, and perceived similarity to practice?

We are in the process of providing an empirical answer. Our approach is to let the participants of our accounting experiments speak. We have already recruited over 618 individuals who participated in different accounting experiments at Tilburg University. We asked them about their motivation to participate, how engaged they were, and how similar the experiment was to practice. The different accounting experiments varied in their features. Some experiments used variable pay while others did not; some recruited participants in accounting courses while others recruited participants from student pools; some elicited perceptions and judgments while others focused on decisions with consequences for the decision-maker or others; some experiments were challenging to understand while others were easy to understand. Although our research is far from finished, the natural variation among these different accounting experiments already reveals some interesting insights into participants’ perceptions of accounting experiments’ features.

This ongoing research has produced four preliminary findings. First, participants who made decisions with consequences for themselves or others during the experiment rated the experimental setting as more reflective of situations they may encounter in practice than participants who did not make such decisions. Second, participants recruited from a student pool, which consists of students from various disciplines, found it significantly more challenging to relate the experimental setting to practice than participants recruited from accounting courses. Third, variable pay is positively related to participants’ motivation to take part in the experiment. It would not have been very reassuring for the field of economics if we had not found this third result. Lastly and most importantly, the understandability of the experiment is positively related to all three quality criteria. Participants who rate accounting experiments as more understandable are more motivated to participate, are more engaged while taking part, and consider the experimental setting more reflective of situations they may encounter in practice.

So far, our research suggests that understandability is the design feature that contributes the most to all three quality criteria. Accordingly, we can already recognize that conciseness and clarity are important factors when designing accounting experiments. Promoting understandability implies not making accounting experiments more complicated than they have to be, and not including features that are not essential for testing your hypothesis. Concretely speaking, you could consider incorporating a concise and clear set of instructions and informing participants about the nature of the experiment as promptly and accurately as possible.

Our preliminary results also suggest an essential role for assessing participant understanding in the ex-post questionnaire. Although the inclusion of manipulation checks is relatively common, statements about participant understanding are not always included. However, in contrast to manipulation checks, such statements are relatively easy to design, and experimental researchers can re-use the same statements for other experiments.

We cannot wait to continue our research into participants’ perceptions of accounting experiments, and we will keep those interested informed about what we learn. We will need a much larger sample to make more reliable inferences, because many of the experimental features we consider vary between experiments, not between participants. Thus, we could use your help! Are you running an accounting experiment in the near future? Please contact us via e-mail! We would love to discuss distributing our survey after your experiment! The survey takes a maximum of 10 minutes to complete and can be distributed safely to your participants after you have run your experiment. If you allow us to distribute the survey, we will make sure to cite you in the research report of this project.
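The sample-size point above can be made concrete with a minimal sketch. It uses the standard design-effect approximation for clustered samples, n_eff = n / (1 + (m - 1) * ICC), where m is the average number of participants per experiment and ICC is the intraclass correlation of responses within an experiment. The function name, the participant and experiment counts, and the ICC values are all illustrative assumptions, not estimates from this project.

```python
def effective_sample_size(n_participants: int, n_experiments: int, icc: float) -> float:
    """Approximate effective sample size for participants clustered in experiments.

    Uses the design-effect approximation n_eff = n / (1 + (m - 1) * icc),
    where m is the average number of participants per experiment.
    """
    if n_experiments <= 0 or n_participants < n_experiments:
        raise ValueError("need at least one participant per experiment")
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must lie between 0 and 1")
    avg_cluster_size = n_participants / n_experiments
    design_effect = 1.0 + (avg_cluster_size - 1.0) * icc
    return n_participants / design_effect


# Hypothetical numbers for illustration only: 600 participants in 10 experiments.
for icc in (0.0, 0.1, 0.5, 1.0):
    n_eff = effective_sample_size(600, 10, icc)
    print(f"ICC = {icc:.1f}: effective sample size is about {n_eff:.1f}")
```

When responses within an experiment are fully correlated (ICC of 1), the effective sample size collapses to the number of experiments, which is why adding experiments helps more than adding participants to existing ones.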

References

Bloomfield, R. J., Nelson, M. W., & Soltes, E. F. (2016). Gathering Data for Archival, Field, Survey and Experimental Accounting Research. Journal of Accounting Research 54(2): 341-395.

Bonner, S. E. (2007). Judgment and Decision Making in Accounting. 1st Edition: Pearson.

Friedman, S., Friedman, D., & Sunder, S. (1994). Experimental Methods: A Primer for Economists: Cambridge University Press.

Smith, V. L. (1976). Experimental Economics: Induced Value Theory. The American Economic Review 66(2): 274-279.

How to reference this online article?

Dierynck, B., & Van Pelt, V. F. J. (2020, October 19). What do participants think of accounting experiments? A preliminary look into ongoing research. Accounting Experiments, Retrieved from: https://www.accountingexperiments.com/post/participant-perceptions/.